AI analytics you can trust

Powered by Omni's semantic model.

Use AI wherever you’re analyzing data

Chat

Ask questions in a natural-language interface that keeps context from one question to the next.

Dashboard

Synthesize insights across charts and dig in further. Just ask!

Workbook

Build queries with a prompt and switch to point-and-click, SQL, or spreadsheets at any time.

MCP Server

Use Omni’s querying capabilities in your team’s chatbots and other external AI tools.

Learn more →

A suite of tools for any task

Run queries

Create calculations

Filter data

Build visualizations

Summarize results

Compare time periods

How our customers are using AI

Training Omni’s AI to understand their business with the semantic layer

"We looked at the questions people ask, like ‘How many tickets did Michael resolve last year?’ Then we taught the AI how to interpret names, attributes, and domain-specific language. Omni’s AI accuracy jumped overnight."

Read case study →

Enriching Omni’s AI with context from Notion and Cursor

"Omni’s AI does well because it’s backed by a structured data model. Now, it’s easier for us to train it like we would teach an analyst — giving it the right context to understand our business language and definitions."

Read case study →

Enabling business users to get accurate answers with AI

"When we asked AI to explain a chart for the first time and it gave us an accurate response, our team literally screamed out of excitement. People always ask for help interpreting data, and now Omni’s AI gives them a starting point and saves my team time."

Read case study →

Delivering in-app reporting with AI chat

"Omni gives our customers power without overwhelming them. And with AI, analyzing and understanding data within our platform is even more accessible to our diverse user base."

Read case study →

Improve trust with a semantic model

Curate responses

Add instructions for datasets and fields.

Add context as you go

Adjust prompts in real-time.

Use existing metrics, joins, and metadata

Build for BI, re-use for AI.

Launch a new AI product

Customize with Omni’s MCP server and APIs

Surface insights to your customers using AI

Create custom pricing tiers

Our approach to AI

FAQs

How does Omni’s AI work?

Omni’s AI is integrated throughout the platform: you can use it in a standalone chat interface, in dashboards, in summary visualizations, and more to speed up everyday workflows. It operates on top of Omni’s semantic layer, which defines metrics, dimensions, relationships, and business logic in one shared model. With that understanding of your business, the AI delivers consistent, trustworthy results.

When you ask a question, Omni’s AI uses that context to reason through the request, run the appropriate analysis, and return results with supporting evidence. It can handle open-ended questions and multi-step analysis instead of forcing users into single, isolated prompts.

Through Omni’s MCP server and APIs, customers can even bring Omni’s AI into other tools and workflows beyond the platform.

Why is Omni’s AI more accurate than other platforms?

Most AI analytics tools sit on top of raw tables or disconnected models, without any business context. That means they have to guess what metrics mean, how tables join to each other, and which definitions to use. The result is answers that change depending on how a question is phrased.

Omni’s AI is different because it’s grounded in Omni’s built-in semantic layer. It uses the same governed metrics, joins, and business logic your team already relies on for dashboards and reporting. On top of that, Omni lets you add AI-specific context to tune responses, like “Only use this dataset when asked about closed won deals.” That shared foundation ensures the AI uses the right definitions every time, for every user.

Does Omni use its own LLM, or does it leverage external LLMs?

Omni currently uses AWS Bedrock-hosted Claude models for most tasks and OpenAI models only for advanced AI visualizations. Omni does not have its own LLM. For more, see our docs.

How does Omni’s AI work behind the scenes?

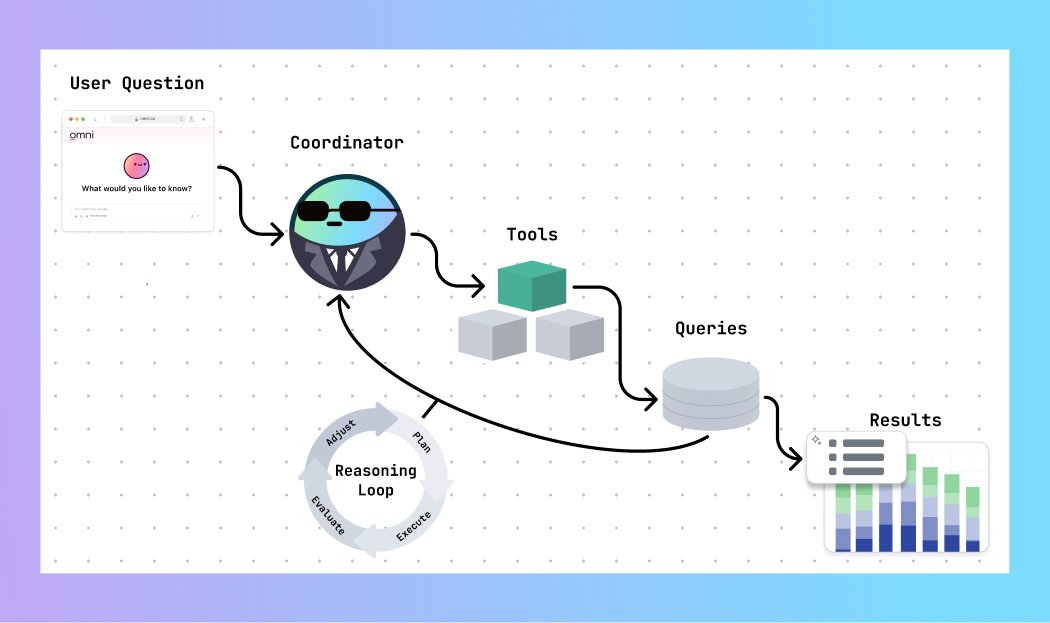

Omni’s AI is an agentic system that can reason through complex questions. At its core is a coordinator mechanism that plans actions, selects tools, executes queries, evaluates results, and decides what to do next. This enables multi-step analysis, such as checking assumptions, breaking a question into sub-queries, or validating unexpected results before summarizing.

The AI does not generate raw SQL directly from text; we found that this approach can produce inconsistent results. Instead, the AI generates “semantic queries” through Omni’s semantic layer, which defines metrics, dimensions, joins, access controls, and AI-specific instructions. The semantic layer ensures that the AI follows the same logic your team already uses: it reuses existing data models, respects row-level security, and stays consistent across users. Omni translates the semantic query into SQL, runs it against your database, and returns the results to the AI to interpret for the user. You can always view the SQL behind an AI response by opening the query in a workbook.
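
To illustrate the general idea, here is a minimal Python sketch of compiling a semantic query into SQL. It is not Omni’s implementation or API: the `SemanticModel` and `SemanticQuery` classes, the `to_sql` function, and the `orders` model are hypothetical. The sketch only shows how a query that names governed fields, rather than containing raw SQL, can be turned into SQL with a row-level filter taken from the user’s attributes.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


# Hypothetical, simplified semantic model. Names and structure are
# illustrative only; they are not Omni's model format or API.
@dataclass
class SemanticModel:
    table: str
    dimensions: dict[str, str]  # field name -> SQL expression
    measures: dict[str, str]    # field name -> SQL aggregate expression
    row_filter_attr: Optional[str] = None    # user attribute used for row-level security
    row_filter_column: Optional[str] = None  # column that attribute is matched against


@dataclass
class SemanticQuery:
    # The AI selects governed fields by name instead of writing SQL.
    dimensions: list[str]
    measures: list[str]
    limit: int = 100


def to_sql(model: SemanticModel, query: SemanticQuery, user_attrs: dict[str, str]) -> str:
    """Compile a semantic query into SQL, rejecting fields the model doesn't define."""
    for name in query.dimensions:
        if name not in model.dimensions:
            raise KeyError(f"unknown dimension: {name}")
    for name in query.measures:
        if name not in model.measures:
            raise KeyError(f"unknown measure: {name}")

    select = [f"{model.dimensions[d]} AS {d}" for d in query.dimensions]
    select += [f"{model.measures[m]} AS {m}" for m in query.measures]
    sql = f"SELECT {', '.join(select)} FROM {model.table}"

    # Row-level security: the filter value comes from the user's attributes,
    # never from the prompt. (A production system would use bind parameters.)
    if model.row_filter_attr and model.row_filter_column:
        value = user_attrs[model.row_filter_attr].replace("'", "''")
        sql += f" WHERE {model.row_filter_column} = '{value}'"

    if query.dimensions:
        # Group by every selected dimension, referenced by position.
        positions = ", ".join(str(i + 1) for i in range(len(query.dimensions)))
        sql += f" GROUP BY {positions}"
    return sql + f" LIMIT {query.limit}"


orders = SemanticModel(
    table="orders",
    dimensions={"region": "orders.region"},
    measures={"total_revenue": "SUM(orders.amount)"},
    row_filter_attr="region",
    row_filter_column="orders.region",
)
query = SemanticQuery(dimensions=["region"], measures=["total_revenue"], limit=10)
print(to_sql(orders, query, {"region": "EMEA"}))
```

Because the AI can only name fields the model defines, an unknown field fails loudly instead of being guessed, and the security filter is added the same way regardless of how the question was worded.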

By taking this approach, Omni’s AI behaves more like an automated analyst operating inside Omni’s architecture, rather than a generic language model guessing at answers. This gives you the governance and control of a robust analytics platform with the simple, easy-to-use interface of a chatbot.

How does Omni’s AI compare to other AI tools?

Omni’s semantic model lets you feed important context and nuance into the AI to promote reliable responses, instead of expecting LLMs to deliver precise answers based on your raw data.

In addition, while other tools are solely focused on enabling an “AI chatbot” experience, Omni’s AI lets you do more than just ask questions and get black-box answers. AI in Omni can accelerate every part of your data analysis workflow – including creating queries, filtering, writing Excel formulas, and more.

In fact, AI is embedded into Omni’s workbook experience rather than being a separate interface, making it interoperable with the rest of Omni’s analysis methods. As you query with AI, you can drop into the point-and-click UI, SQL, or spreadsheet formulas at any point. Users aren’t locked into AI, even if that’s their starting point.

Customers can also embed Omni’s AI interface directly into their own product, or use Omni’s APIs and MCP server to customize further.

Can I test the accuracy of Omni’s AI?

Omni currently doesn’t offer a way to “test” the accuracy of responses. However, we encourage data teams to optimize their data for AI and experiment with adding context to deliver more reliable results. By using this human-in-the-loop approach, teams can continually evolve their data model for AI to deliver more accurate, relevant answers.

Why is your semantic model important to AI?

Omni’s semantic layer is the set of instructions Omni uses to generate correct, performant SQL. It brings your schema, relationships, and topics together in one place so the AI can understand how your data is structured. This is crucial for returning accurate results, including safely handling fanouts, where a one-to-many join duplicates rows and would otherwise inflate aggregates like sums and counts.

The semantic layer also gives Omni’s AI guardrails by scoping the data it can use. Based on the user’s question, it constrains the AI to relevant topics and fields. It even lets you encode business context explicitly in an ai_context field instead of letting the model guess.

It is also key to security and governance. Since data permissions and row-level filters live in the semantic layer, the proper security measures are enforced via user attributes on every query. Even when using AI, users only have access to the data they should.

For example, users may ask AI about revenue, but “revenue” rarely means one thing. Sales might mean booked revenue. Finance might mean GAAP revenue. Omni lets you define both in the semantic model as separate, named metrics.

Then, when someone asks AI a question about revenue, Omni uses the user’s attributes to pick the right definition. If the user is in Sales, AI answers using the Sales revenue metric. If the user is in Finance, AI defaults to the Finance revenue metric. If they explicitly choose a topic like Sales Revenue or Finance Revenue, that choice wins. Having this level of governance helps you scale AI to your organization without losing control.
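
The selection rule described above can be sketched in a few lines of Python. This is a hypothetical illustration, not Omni configuration: the metric names, the `team` attribute, and the `pick_revenue_metric` function are assumptions made for the example.

```python
from typing import Optional

# Hypothetical metric names; in practice these would be separate, named
# metrics defined in the semantic model.
TOPIC_TO_METRIC = {
    "Sales Revenue": "sales_booked_revenue",
    "Finance Revenue": "finance_gaap_revenue",
}
TEAM_TO_METRIC = {
    "Sales": "sales_booked_revenue",
    "Finance": "finance_gaap_revenue",
}


def pick_revenue_metric(user_attrs: dict, chosen_topic: Optional[str] = None) -> Optional[str]:
    """Resolve which 'revenue' definition a question should use."""
    # 1. An explicit topic choice always wins.
    if chosen_topic in TOPIC_TO_METRIC:
        return TOPIC_TO_METRIC[chosen_topic]
    # 2. Otherwise, fall back to the user's team attribute.
    # Returning None (an assumption of this sketch) signals that no default applies.
    return TEAM_TO_METRIC.get(user_attrs.get("team"))


print(pick_revenue_metric({"team": "Sales"}))                     # sales_booked_revenue
print(pick_revenue_metric({"team": "Sales"}, "Finance Revenue"))  # finance_gaap_revenue
```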

How do you recommend setting up your data model for accurate AI responses?

Making your datasets AI-ready doesn’t need to be complicated – we’ve found that adding context in a few key places makes a big difference, and you can continue tuning up the model as you go. For tips, check out this how-to guide from our VP of Product Arielle, and watch our CEO Colin go from zero to AI-ready live.

How does Omni handle data security?

Omni never shares your data without your permission. When AI features are toggled on, only necessary data is shared with external LLMs. For example, when you ask AI to generate queries or write formulas, only query metadata (column names, sorts, limits, etc.) is shared. If you ask AI to summarize a query, the result set is shared. Learn more in our docs.
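
As a rough illustration of that principle, the hypothetical Python sketch below builds the payload an AI request might send to an external LLM. The task names, payload keys, and `build_llm_payload` function are assumptions, not Omni’s actual request format; the point is only that query-generation and formula tasks carry metadata, while summarization also carries the result set.

```python
from __future__ import annotations

from typing import Any, Optional

# Hypothetical task names; only these two kinds of requests are modeled here.
METADATA_ONLY_TASKS = {"generate_query", "write_formula"}


def build_llm_payload(task: str, query: dict[str, Any],
                      rows: Optional[list[dict[str, Any]]] = None) -> dict[str, Any]:
    """Return only the data a given AI task needs to send to an external LLM."""
    # Query metadata: column names, sorts, limit. No row values.
    metadata = {
        "columns": list(query["columns"]),
        "sorts": list(query.get("sorts", [])),
        "limit": query.get("limit"),
    }
    if task in METADATA_ONLY_TASKS:
        return {"task": task, "metadata": metadata}
    if task == "summarize":
        # Summarizing a query is the case where the result set is shared.
        return {"task": task, "metadata": metadata, "results": list(rows or [])}
    raise ValueError(f"unknown task: {task}")


query = {"columns": ["region", "total_revenue"], "sorts": ["total_revenue desc"], "limit": 10}
rows = [{"region": "EMEA", "total_revenue": 1200}]
print(build_llm_payload("generate_query", query, rows))  # no "results" key
print(build_llm_payload("summarize", query, rows)["results"])
```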