We didn’t set out to build an MCP server.

In fact, AI at Omni started as a handful of experiments approached with plenty of skepticism. Data analytics requires consensus, precision, and governance, and we felt that was at odds with AI, which can be…unpredictable.

While working on an initial text-to-SQL bot, we realized we could take a different approach to AI. The semantic model our customers had already built in Omni naturally helped AI return accurate results in cases where other tools couldn’t. What our customers build for great BI could also be used for great AI.

Since then, we’ve made big strides to help people analyze data with confidence using AI. Our latest step is the MCP server: a new way to use Omni’s semantic model and query engine inside the AI tools you’re already using, like Claude or ChatGPT.

For a quick overview, check out this video from my teammate Conner:

In this post, I’ll walk through what the MCP server is and how to use it. I’ll also share the path we took to get here — including the experiments, learnings, and customer conversations that shaped the journey.

Introducing Omni’s MCP server #

Omni’s MCP server lets you plug Omni into whichever LLM tools you already use, as long as they support the Model Context Protocol (MCP). Anyone can ask a question about their data in their favorite AI interface, and the LLM will “borrow” Omni’s capabilities to return a result governed by your business logic and security protocols.
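Under the hood, an MCP client such as Claude talks to an MCP server with JSON-RPC 2.0 messages, and “borrowing” one of the server’s capabilities means sending a `tools/call` request. The sketch below only builds that message. The tool name `query_omni` and its `question` argument are hypothetical placeholders, not the actual tools Omni’s server exposes.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        # `name` selects a tool the server advertised via `tools/list`;
        # `arguments` must match that tool's declared input schema.
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool and argument names, for illustration only.
request = build_tool_call(
    1, "query_omni", {"question": "Who are our top 10 closed-lost accounts by ARR?"}
)
print(request)
```

The LLM decides when to send a request like this; the query itself runs on the server side, and the client only sees the result that comes back.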

Data teams can set up the MCP server behind the scenes, and everyone can simply ask questions and get answers.

Unlike traditional text-to-SQL approaches, Omni’s semantic model helps prevent hallucinations and protect against data leakage. With the MCP server, AI queries inherit both access controls and business context defined in your semantic model. That means users only see data they’re allowed to access, and AI responses stay grounded in logic your team has already built.
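One common way a governed query layer can enforce access controls is to merge mandatory row-level filters, derived from the requesting user’s attributes, into every AI-generated query before it runs, so the model cannot widen its own access. The sketch below illustrates that idea with a hypothetical dict-based query format and a hypothetical `enforce_access` helper; it is not Omni’s implementation.

```python
from copy import deepcopy


def enforce_access(query: dict, user_attrs: dict, access_rules: dict) -> dict:
    """Return a copy of `query` with row-level filters forced to the user's values.

    `access_rules` maps a field name to the user attribute that restricts it,
    e.g. {"accounts.region": "region"}. A missing attribute raises KeyError,
    so the query fails closed instead of running unfiltered.
    """
    governed = deepcopy(query)  # never mutate the model's original query
    filters = governed.setdefault("filters", {})
    for field_name, attr_name in access_rules.items():
        # Overwrite whatever the model proposed for this field.
        filters[field_name] = user_attrs[attr_name]
    return governed


ai_query = {
    "fields": ["accounts.name", "accounts.arr"],
    "filters": {"accounts.region": "EMEA"},  # the model asked for EMEA data
}
print(enforce_access(ai_query, {"region": "NA"}, {"accounts.region": "region"}))
# {'fields': ['accounts.name', 'accounts.arr'], 'filters': {'accounts.region': 'NA'}}
```

Because the filter is applied after generation, it holds even when the model writes a query that asks for more than the user may see.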

By bringing governed data closer to the tools and workflows people already use, you unlock new use cases without steep learning curves:

Marketing #

Looking to unlock a new revenue stream, a growth marketer wants to experiment with a closed-lost re-engagement campaign. She opens Claude and asks, “Who are our top 10 closed-lost accounts by ARR?” Claude pulls the answer from Omni via MCP. With that list in hand, she follows up: “Create a HubSpot email template for our closed-lost accounts.” Because Claude also has access to the team’s marketing email library and brand messaging framework, it can generate a ready-to-send draft she can copy directly into HubSpot, helping her move from idea to campaign in just a few minutes.

Sales Enablement #

A product marketer is updating the company’s core sales enablement doc to reflect recent wins. He first connects Claude to Google Docs so it has access to the existing content. Then, he asks Claude to pull a report of recent won deals using Omni via MCP. Once the results are in, he follows up: “Based on this data and the doc, what should we update?” Claude suggests positioning updates and fresh proof points, which he adds straight into the doc. Claude’s access to Omni helps him make sense of the data and add new insights without needing to bounce between tools.

Revenue Operations #

A month before the quarter closes, the CEO asks the RevOps team for a quick sales forecast for next quarter. Instead of running the whole forecast manually, the RevOps analyst opens Claude and says: “Create a forecast for Q3 using Omni data.” Claude calls Omni via MCP to pull key inputs like closed deals, conversion rates, and average deal cycle. Then it generates a forecast and rolls it up into a concise summary for the CEO, saving the analyst hours and keeping the team focused on company goals.

—

If you’re looking to get started, please reach out to our team to get an API key. Here’s a quick tutorial where I walk through the setup process, and our docs have more detailed information.

Our journey to MCP 🧗 #

Shipping the Omni MCP server was an organic process, driven by customer feedback. Every new AI feature made it increasingly clear that teams wanted more ways to use AI. And once we got going, we couldn’t stop shipping 😅

Early AI experiments: Start small, be useful #

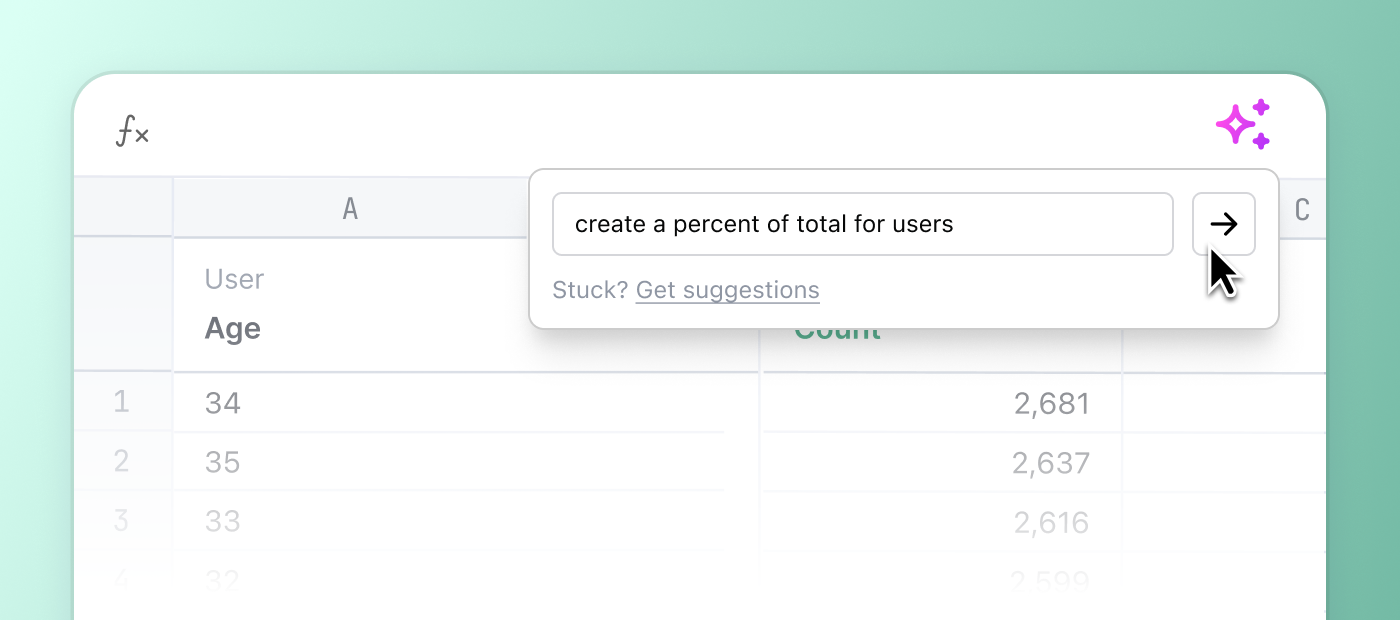

Our first AI feature brought natural language into Excel calculations. We didn’t trust AI to drive full analyses yet, but it was great at solving the classic problem of which Excel function to use. It’s still one of our customers’ favorite features, and it set the tone for how we approached AI — it had to be genuinely useful.

Adding natural language queries #

I joined Omni after we launched AI calculations, and seeing how helpful they were made me excited to explore more. By that time, LLMs had improved enough that natural language queries felt within reach.

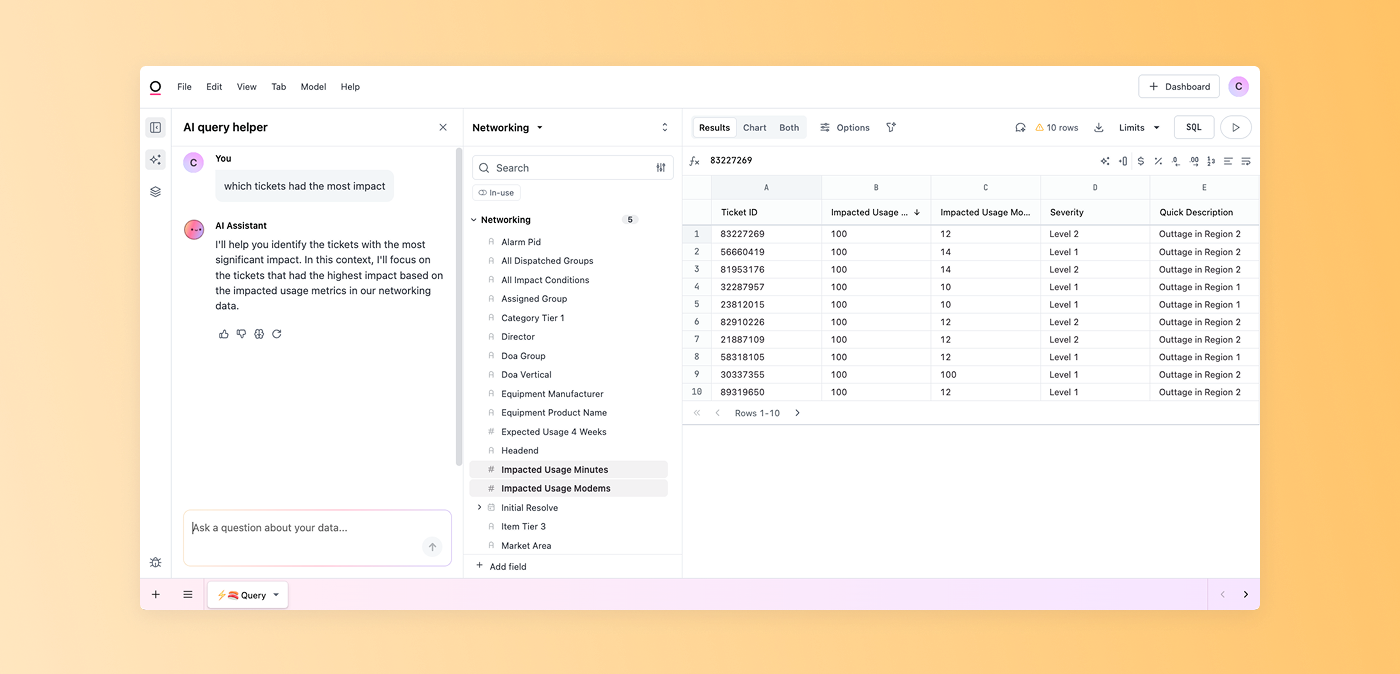

We added an AI query helper to the workbook. Users could type in a question and Omni would generate an interactive result that they could filter, add or remove fields from, or turn into visualizations, just like a normal query.

At that point, we felt like there was real potential. Even if AI didn’t get it exactly right, having a solid starting point made a huge difference — especially for folks who aren’t always confident getting started with analytics.

Teaching AI through the semantic model #

A big unlock came when we started refining the semantic model to improve accuracy.

Just like you’d give Claude or ChatGPT instructions, we made it easy to “prompt engineer” Omni’s responses through the semantic model. We added tools to give AI context at the Topic (dataset) or field level, create sample queries, and control which fields AI could use.

This dramatically improved results — and once we saw that, we started using AI a lot more internally, too.
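To make “prompt engineering through the semantic model” concrete, here is a minimal sketch of how topic-level context, field descriptions, a field allowlist, and sample queries could be folded into the text an LLM sees. The `Topic` and `FieldSpec` structures and the `build_ai_context` function are hypothetical and do not reflect Omni’s actual model syntax.

```python
from dataclasses import dataclass, field


@dataclass
class FieldSpec:
    name: str
    description: str
    ai_visible: bool = True  # hypothetical switch: may AI use this field?


@dataclass
class Topic:
    name: str
    context: str  # topic-level instructions for the model
    fields: list[FieldSpec]
    sample_queries: list[str] = field(default_factory=list)


def build_ai_context(topic: Topic) -> str:
    """Render topic context, allowed fields, and example questions as prompt text."""
    lines = [f"Topic: {topic.name}", f"Context: {topic.context}", "Fields:"]
    for spec in topic.fields:
        if spec.ai_visible:  # hidden fields never reach the model
            lines.append(f"- {spec.name}: {spec.description}")
    if topic.sample_queries:
        lines.append("Example questions:")
        lines.extend(f"- {q}" for q in topic.sample_queries)
    return "\n".join(lines)


deals = Topic(
    name="opportunities",
    context="ARR is reported in USD. Closed-lost means stage = 'Closed Lost'.",
    fields=[
        FieldSpec("opportunities.arr", "Annual recurring revenue in USD"),
        FieldSpec("opportunities.internal_notes", "Free-text rep notes", ai_visible=False),
    ],
    sample_queries=["Top 10 closed-lost accounts by ARR"],
)
print(build_ai_context(deals))
```

The design point is that the context lives next to the model definitions, so improving a description or hiding a field changes every AI answer that touches that topic.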

Building chat and meeting Blobby 👋 #

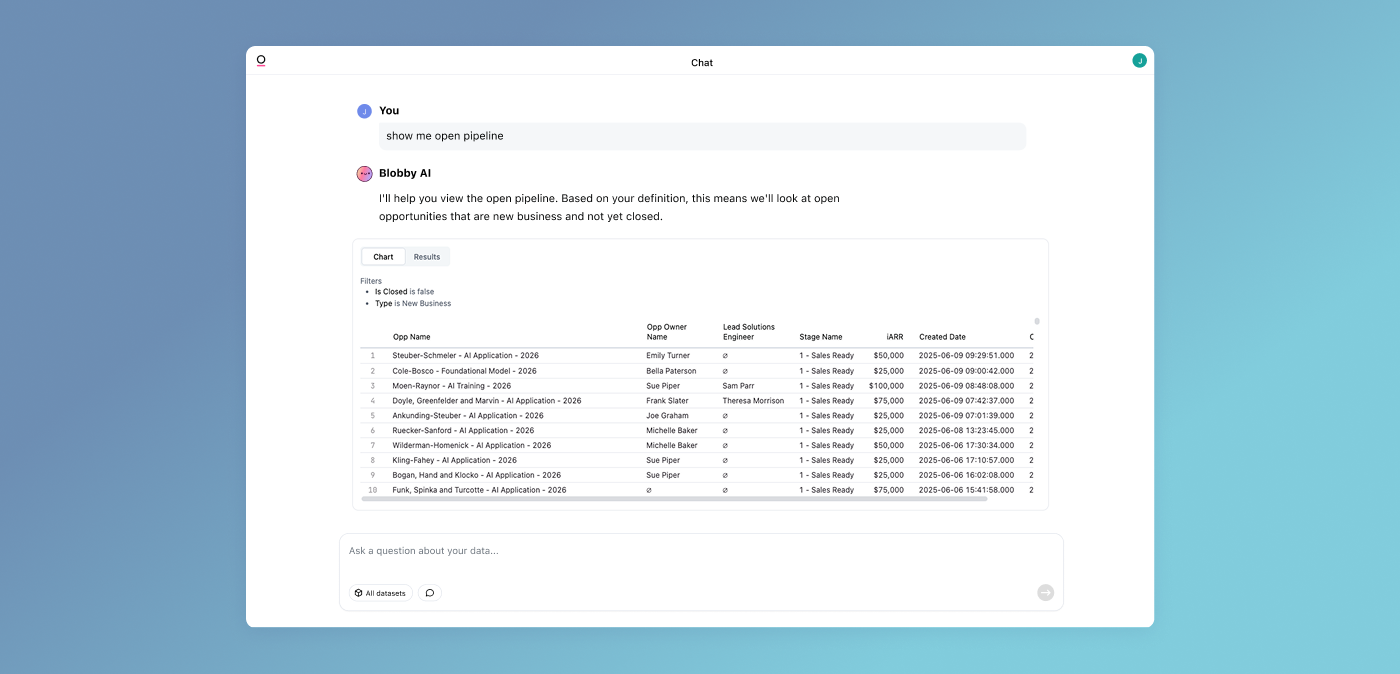

As we talked to customers, we discovered a pattern: analysts were loving AI, but less technical users still wanted an easier entry point. They asked, “Can AI just be its own interface?”

Once we were confident in AI’s performance inside the workbook, we launched our chat interface with Blobby, our AI helper. It was designed so anyone could ask questions and get high-quality charts and answers, without needing to dive deeply into Omni unless they wanted to.

Expanding to APIs and customer-facing apps #

As we kept adding AI features, customers started asking for them in their own products. It was a natural next step to extend our embedded analytics offering and APIs to support AI.

Some customers started embedding analytics with AI directly into their products. Others created new premium tiers with AI features built in. It’s been exciting to see teams like Standard Metrics differentiate their products so quickly using what we built.

👆 Demo from Standard Metrics of their AI-Powered Portfolio Intelligence for VCs

Building the MCP server #

It wasn’t until we shipped our AI APIs that we saw for ourselves that MCP was the most exciting and accessible way to deliver natural language analytics we had come across.

Getting the MCP server up and running wasn’t trivial. Until this point, our suite of AI tools had been tightly coupled with Omni’s web-based frontend.

The MCP server had no access to our React app, which created roadblocks. Take the retry logic we use when AI-generated queries fail: query validation originally happened on the client, so if a query failed, the frontend would send a request back to the server to try again with new context. The MCP server didn’t have this client logic, so we had to completely re-architect how retries worked.

…And that’s just one example of the hiccups and re-work we needed to tackle 😅

But in the end, it made our foundation better. Now, whether you use AI in the workbook, via chat, or through the MCP server, it all runs on the same core implementation and delivers the same high-quality results.
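The retry change in this section amounts to moving the generate, validate, and retry loop out of the browser and into code the server owns, so every surface can share it. A minimal sketch of such a loop follows; `generate` and `validate` are hypothetical stand-ins for an LLM call and a query validator, and the default of three attempts is an arbitrary choice.

```python
from typing import Callable, Optional

# generate(question, feedback) -> candidate query
Generator = Callable[[str, Optional[str]], str]
# validate(query) -> None if the query is valid, otherwise an error message
Validator = Callable[[str], Optional[str]]


def generate_with_retries(
    question: str, generate: Generator, validate: Validator, max_attempts: int = 3
) -> str:
    """Ask for a query, validate it server-side, and retry with the error as context."""
    feedback: Optional[str] = None
    for _ in range(max_attempts):
        query = generate(question, feedback)
        error = validate(query)
        if error is None:
            return query
        # Feed the validation error into the next attempt so it can self-correct.
        feedback = error
    raise RuntimeError(f"No valid query after {max_attempts} attempts: {feedback}")


KNOWN_FIELDS = {"accounts.name", "accounts.arr"}  # hypothetical model fields


def fake_generate(question: str, feedback: Optional[str]) -> str:
    # First attempt picks a field that doesn't exist; the retry corrects it.
    return "accounts.arr" if feedback else "accounts.revenue"


def fake_validate(query: str) -> Optional[str]:
    return None if query in KNOWN_FIELDS else f"Unknown field: {query}"


print(generate_with_retries("Top accounts by ARR", fake_generate, fake_validate))
# accounts.arr
```

Since nothing in the loop depends on a browser, the workbook, chat, and MCP server can all call the same function.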

The journey continues #

So that’s our journey to building the MCP server and a quick look at how we think about the development process. It’s wild to think about how far we’ve come, and how quickly. But I’m excited to keep going, and you can join us for the ride by watching our weekly product development demos.

The Omni MCP server is available today. Reach out to our team if you’d like to set up your own instance. You can learn more about getting started from our docs and this quick setup tutorial I put together.

As always, please keep the feedback coming. This is the result of customer requests and feedback, so thank you for continuing to help us make Omni better 🙌

Gustav Staprans
