For data leaders, the excitement around AI often comes with high expectations: “Can AI tell us what to do next?” “Are dashboards dead?” “Is this data 100% accurate?”

On the one hand, AI can accelerate the true mission of a data leader: find the signal in the noise, so your team can act with confidence, speed, and accuracy.

It’s why we love ChatGPT. We don’t see thousands of reviews, service records, price comparisons, and crash test ratings. It just recommends the car to buy. The detail’s there if you need it, but the bottom line’s up front.

It’s reasonable to want the same at work. Just ask AI which customers need help, how your feature launch is going, or how much cash you need for next month’s invoices.

But enterprise data has never been that easy. Most of us remember past hype cycles from innovations whose impact fell short, not due to technological inadequacy, but rather the complexities of people, business, and data.

Will AI be any different? We think it can be – but only with careful management.

In this blog, I’ll share a framework to make your AI efforts a success, and advice from some of the smartest data leaders I know.

First, some guiding principles #

Before diving into implementation, I want to share a few themes I see come up again and again when teams start exploring AI analytics.

Principles to steer with #

Fast forward to outcomes. Technology itself is never a goal, and even “improved self-service” is questionable as a business case for AI. Instead, start with the action or decision a user wants to take, and work backwards from there.

Build for trust. Make it easy for people to understand where results come from, and give them tools to inspect or improve what the AI returns. Trust is built over months, but can be lost in an afternoon.

Start simple. You don’t need to roll this out to your whole company on day one. A small number of high-quality use cases goes a long way.

Don’t sacrifice power. AI needs to be grounded in your real data and business vocabulary. When paired with a semantic model, it can handle complex questions without going off the rails.

Pitfalls to avoid #

AI may be shiny and new, but the risks will be familiar. Don’t underestimate them.

Unrealistic expectations. With AI, it’s tempting to expect magic, especially when the interface is as easy as typing a question. But AI is imperfect, and getting the most from it is a skill.

Unclear goals. Without a clear understanding of what “successful” AI adoption looks like, it’s easy to lose momentum or fail to gain sufficient resources.

Skipping the basics. AI relies on a quality semantic model, so you’ll want to ensure your data hygiene, naming conventions, and access rules are solid. Garbage in, garbage out.

Lack of trust. If someone gets a confusing or incorrect AI result early on, they’re unlikely to come back. Establishing trust is crucial for adoption.

These principles have also shaped how we’ve built AI into Omni, and more importantly, can guide you through the process of a successful rollout:

#1: Set your goal #

When you’re first getting started, this is where I recommend spending most of your time. There’s a bit of upfront work involved, but getting this right makes everything downstream easier.

Define the opportunity #

Good leaders know that data is a means to an end, never the goal itself. AI doesn’t change that. Before you touch a keyboard, you’ll need to understand what your potential users are looking to do.

I’m partial to the classic framework “as user X, I want to know Y, so that I can Z”. If Z isn’t a clear action (or action space), you haven’t gone deep enough.

You might hear these examples from other department heads:

Growth teams live in the world of experimentation. They want to deploy and measure experiments faster, so they can know where to double-down and where to change course.

Customer Success teams are always overloaded. They need to triage opportunity and risk as quickly as possible, so they can work with the customers where their time will have the greatest impact.

Executive teams don’t have time to read through every permutation. They need to know what’s on-track, what’s looking off, and who to call.

Then you’ll need to pick somewhere to start. It’s best to go end-to-end with one group first, rather than taking the whole company at once, so you can build early wins and refine your playbook. Your ideal pilot group will have clear action targets, data that’s well-understood, and a can-do attitude.

When working with one of our customers, Feeld, to set up AI in Omni, I chatted with their Director of Analytics Bobby den Bezemer about his approach:

“I have always found it to be effective to work quite closely with your data power users or citizen analysts, who are using data most or have the highest levels of data literacy. Usually they also have quite a high 'can do' attitude and are willing to try new things.

It can be quite effective to run an experimentation program especially in the early days. Say you've got a rough strategy around rolling out AI analytics, you have identified your power users and know where they are. Why not run an early roll out as a pilot/experimentation program ideally in the business domain with the highest % of power users? This is likely to get you tons of learnings to feed back into the strategy.”

Identify a realistic first set of questions #

For your target group(s), identify the kinds of questions that come up all the time, or that take over 3 clicks to answer. Write them down until you can’t think of any more. Then ask department heads and a set of users to write theirs. Better yet, do it all together on a whiteboard.

Then, go from the top and mark anything that feels like “This is pretty clear, a machine with a bit of context should be able to answer this”.

This will probably cover 70–80% of the potential value in your early days. You’ll be shocked at how simple most of these questions are:

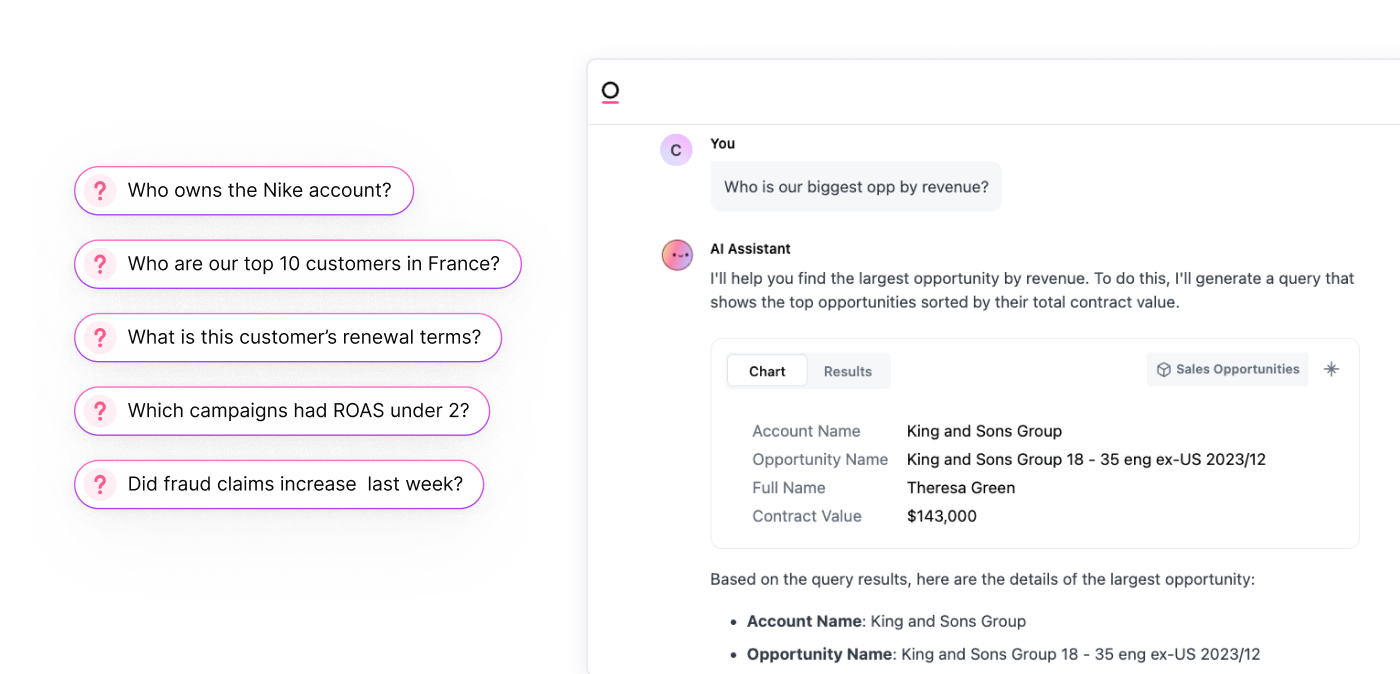

“Who owns the Nike account?”

“Who are our top 10 customers in France?”

“What are this customer’s renewal terms?”

“Which campaigns had ROAS under 2?”

“Did fraud claims increase anywhere last week?”

“Which invoices are due this month?”

“Which customers are underemployed on their contract?”

“Who hasn’t switched to the new version yet?”

“Which SKUs are almost out of stock?”

“How much have we been spending on whistles?”

Complicated? Not especially. But super high impact. For a user, having these answers at your fingertips makes everyday action a breeze, and simple action beats never-ending analysis any day.

We’ve heard this firsthand from Mitchell Hayes, Data Product Manager at Ordermentum, who highlighted the importance of speed:

“I was in a meeting with our product team, and someone asked a question about recent orders, so I just asked Blobby. We got an immediate answer. I could have gotten that answer several ways, but being able to quickly type in a question in natural language while still being able to follow along and participate in the meeting was pretty amazing.”

Moreover, these simple questions don’t require much interpretation. They’re easy to QA, built on familiar tables, and have clear answers, not synthetic scores or ML outputs.

Later on, you can expand into diagnostic questions like “Why did usage drop last month?” or “What’s driving churn?” But at the beginning, it’s helpful to stay in territory where answers are clearly right or wrong.

#2: Build your foundations #

Set up a clean, simple data model #

Semantic layers are critical for accurate AI. Most AI tools generate SQL under the hood, and while AI can write syntactically valid code, it won’t know the nuances of your database and business vocabulary. You also shouldn’t rely on context prompts, which are probabilistic in nature, to enforce access rules. A semantic layer forces your AI to stay within your trusted boundaries.

You don’t need a fully complete semantic layer to get started. But it helps to clean up a few core pieces:

Start with key datasets or Topics first. Focus on the most common tables and fields that support your scoped use cases. You might have a long tail of fields for less common questions. Be aggressive paring that down for AI; hiding unnecessary detail gives fewer places for AI to misinterpret a question or data point.

Confirm your join paths. Make sure the most common joins are clear and consistent. This helps AI tools avoid ambiguity and generate better queries. If different join paths yield different interpretations, like a user who can be either a buyer or seller, make that explicit.

Standardize where you can. If there’s only one way to define deal stage or gross margin, AI models will pick it up much faster and more consistently. But it’s usually more complicated – we’ll get there next.
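To make the idea of a pared-down, trusted boundary concrete, here is a minimal sketch in Python. It is not how Omni or any other tool represents its model; the `Topic` class, the field names, and `validate_request` are all hypothetical. It only shows the principle: the AI may use what the topic exposes, and anything else is rejected by a deterministic check rather than by a prompt.

```python
from dataclasses import dataclass, field


@dataclass
class Topic:
    """A hypothetical, pared-down slice of a semantic model exposed to AI."""
    name: str
    fields: set[str]  # the only fields the AI is allowed to use
    # (left table, right table) -> the single agreed join condition
    joins: dict[tuple[str, str], str] = field(default_factory=dict)


def validate_request(topic: Topic, requested_fields: list[str]) -> list[str]:
    """Return the requested fields that fall outside the topic's boundary."""
    return [f for f in requested_fields if f not in topic.fields]


orders = Topic(
    name="orders",
    fields={"orders.id", "orders.created_at", "orders.total", "customers.region"},
    joins={("orders", "customers"): "orders.customer_id = customers.id"},
)

# An AI-generated request that reaches for a field hidden from this topic.
rejected = validate_request(orders, ["orders.total", "customers.email"])
print(rejected)  # ['customers.email']
```

Keeping one explicit join condition per table pair is the code-level version of "confirm your join paths": there is only one answer for the AI to pick.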

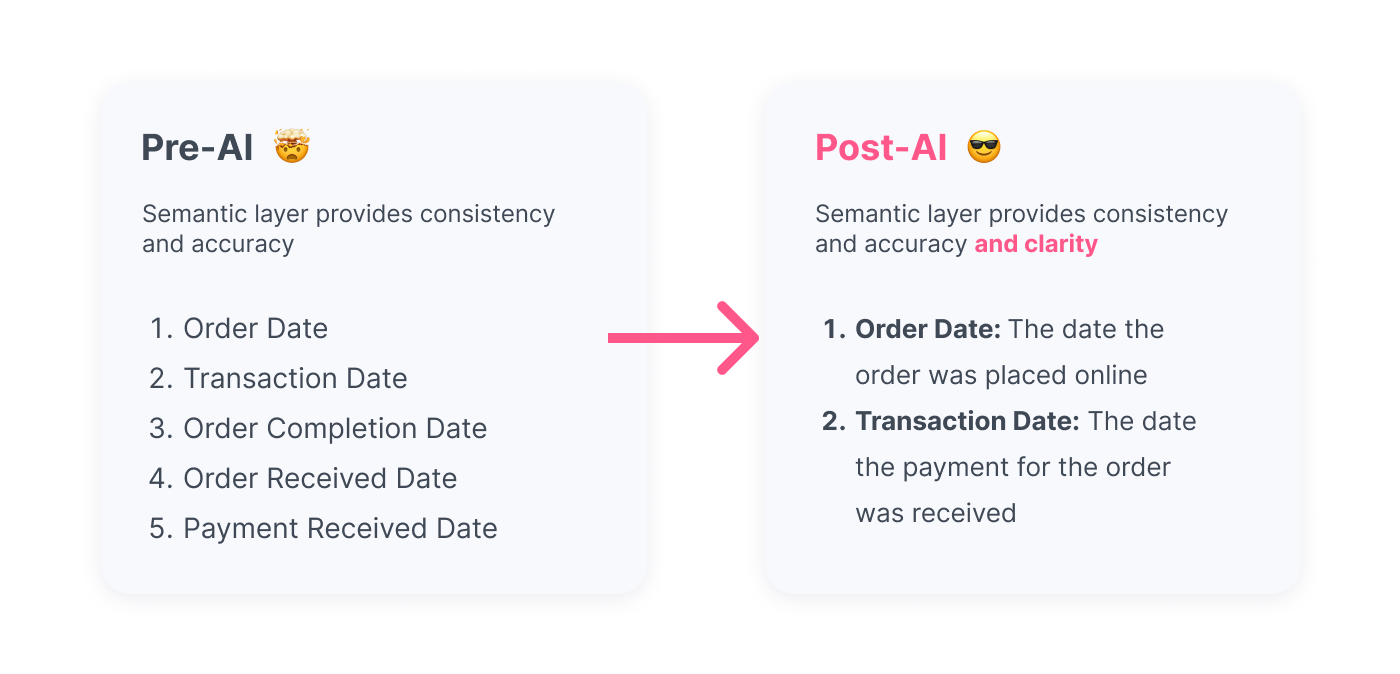

One of our customers embeds Omni into their customer-facing data product to help them understand a range of transactions. However, with multiple versions of transaction dates, it could be confusing even for the data team, let alone their customers. Here’s how they used AI to simplify:

There are a few ways to do this – you might rely more on in-database modeling with dbt or SQLmesh, a semantic BI tool like Omni or Looker, or something else entirely. But don’t skip it.

Describe the nuance #

There’s always a distinction that trips people up: ARR vs Revenue; Users vs Accounts; Downloads vs Activations; Hedged vs Unhedged exposure… you know the drill.

If you wouldn’t expect a new hire to get it straight away, why would you expect your AI?

The addition of metadata and context in a few strategic spots will dramatically boost the accuracy and precision of your tooling:

Synonyms. Every business has its own language. A rep might be sfdc.opportunity_owner. Trials, POCs, and S3 Opps might all be interchangeable and mapped to the same metric: count(distinct case when accounts.first_connected_at is not null then id else null end).

Sample values. For fields like product_tier or region, providing example values (Enterprise / Pro / Starter; AMER / EMEA / APAC) helps the model understand the shape of the data. That way if someone asks for “Europe” but your data is cataloged as “EMEA,” the LLM can connect the dots.

Sample questions. If there’s a specific way people usually phrase something (“Who manages [customer]?”), logging the question and expected response as an example can improve future results.

Descriptions. A short sentence explaining what a table or field represents often makes a big difference. For example, users might ask about “closed deals”. Filtering for “is_closed” would probably include wins + losses, but a reasonable human would probably interpret the question to mean wins. In tools like Omni, you can do this by adding a line to your topic’s AI context: "If asked about closed deals, most often the user means both closed and won."
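As a rough illustration of what this metadata looks like when written down, the sketch below stores a description, sample values, and synonyms for one field. The dictionary layout, the `accounts.region` field name, and `resolve_value` are assumptions for illustration, not any tool's actual format. In practice the LLM makes the Europe-to-EMEA connection itself; the lookup just shows the information it needs to be given.

```python
from typing import Optional

# Hypothetical AI context for one field; the structure is illustrative only.
REGION_CONTEXT = {
    "field": "accounts.region",
    "description": "Sales region the account is assigned to.",
    "sample_values": ["AMER", "EMEA", "APAC"],
    "synonyms": {"europe": "EMEA", "americas": "AMER", "asia": "APAC"},
}


def resolve_value(term: str, context: dict) -> Optional[str]:
    """Map a user's word to a stored value: exact match first, then synonyms."""
    normalized = term.strip().lower()
    for value in context["sample_values"]:
        if value.lower() == normalized:
            return value
    return context["synonyms"].get(normalized)


print(resolve_value("Europe", REGION_CONTEXT))  # EMEA
print(resolve_value("emea", REGION_CONTEXT))    # EMEA
print(resolve_value("Mars", REGION_CONTEXT))    # None
```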

Ordermentum took the time to build their business language into their AI, and found that it was critical for trust and adoption, all the way up to their CMO, Sophie:

"Our data team has aligned data properties with the terms we use every day, and now I can just open up Blobby (Omni’s AI assistant) and ask exactly what I’m looking for – like ‘Who were our top suppliers last month based on GMV growth?’ The level of detail is excellent, and honestly, it’s actually fun to use."

Test and iterate #

At this point, you can start running your AI chat through some questions. But unlike traditional BI, LLMs can’t give you a definite “all done” signal that your model is fully ready. You’ll need to test and iterate.

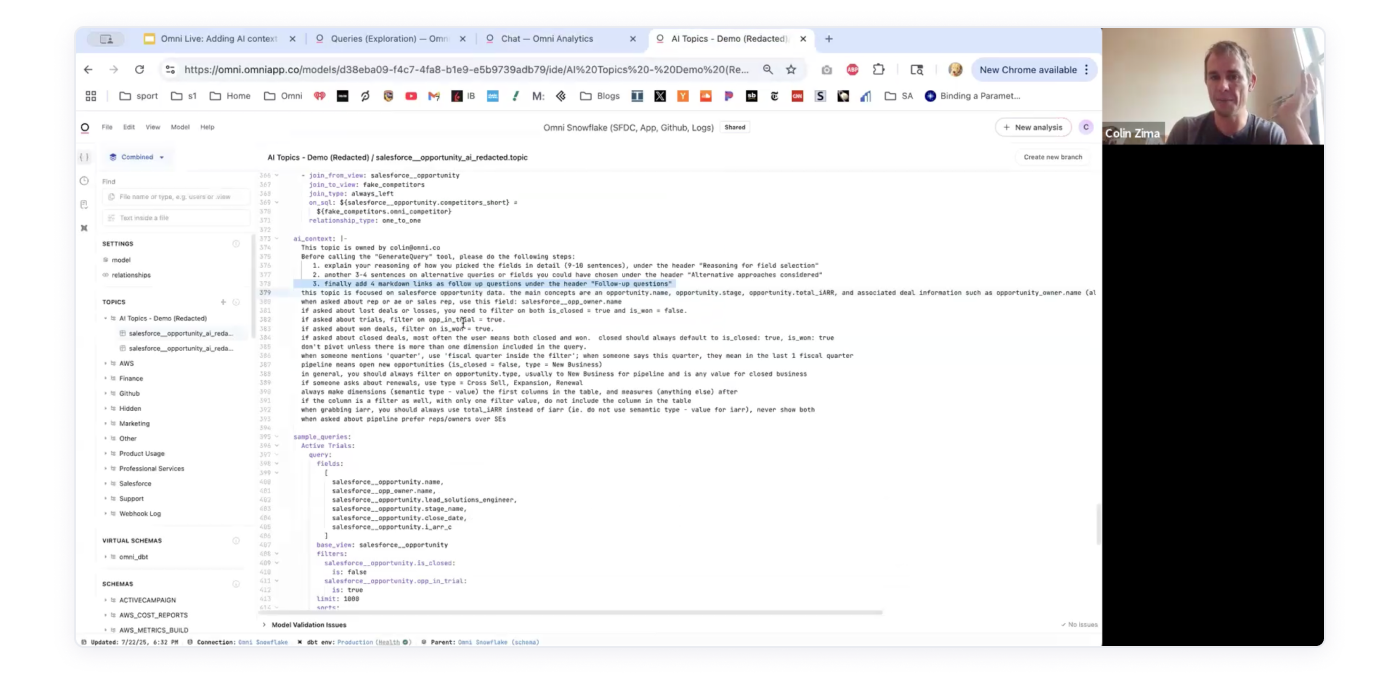

Fortunately, you already built a list of sample questions the business might ask. Use that to test questions, sense-check the answers, find surprising nuance, and refine the context until AI gets it right.
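One way to make this loop repeatable is to keep the sample questions alongside answers your team has verified by hand, and rerun them whenever the context changes. The sketch below assumes a function that takes a question and returns a text answer; `fake_ask` is a stand-in for whatever your tool provides, and the names and answers in `TEST_CASES` are invented for the demo.

```python
from typing import Callable

# Sample questions paired with hand-verified answers (made up for this demo).
TEST_CASES = [
    ("Who owns the Nike account?", "Dana Lee"),
    ("Which invoices are due this month?", "INV-104, INV-117"),
]


def run_checks(ask: Callable[[str], str], cases: list[tuple[str, str]]) -> list[str]:
    """Return the questions whose answers do not match the verified answer."""
    failures = []
    for question, expected in cases:
        answer = ask(question)
        if answer.strip().lower() != expected.strip().lower():
            failures.append(question)
    return failures


def fake_ask(question: str) -> str:
    """Stand-in for a real AI chat call; it gets one question wrong on purpose."""
    canned = {"Who owns the Nike account?": "Dana Lee"}
    return canned.get(question, "I don't know")


failures = run_checks(fake_ask, TEST_CASES)
print(failures)  # ['Which invoices are due this month?']
```

Each failure points at a place to add a synonym, a description, or a sample question, after which you run the list again.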

Our VP of Product, Arielle, compiled our recommendations for adding AI context into this guide. And our CEO Colin demoed the entire process live. I’d highly recommend watching it here.

#3: Grow the surface area #

Beyond asking basic questions with AI, you can use more tools to help drive insight to action even further:

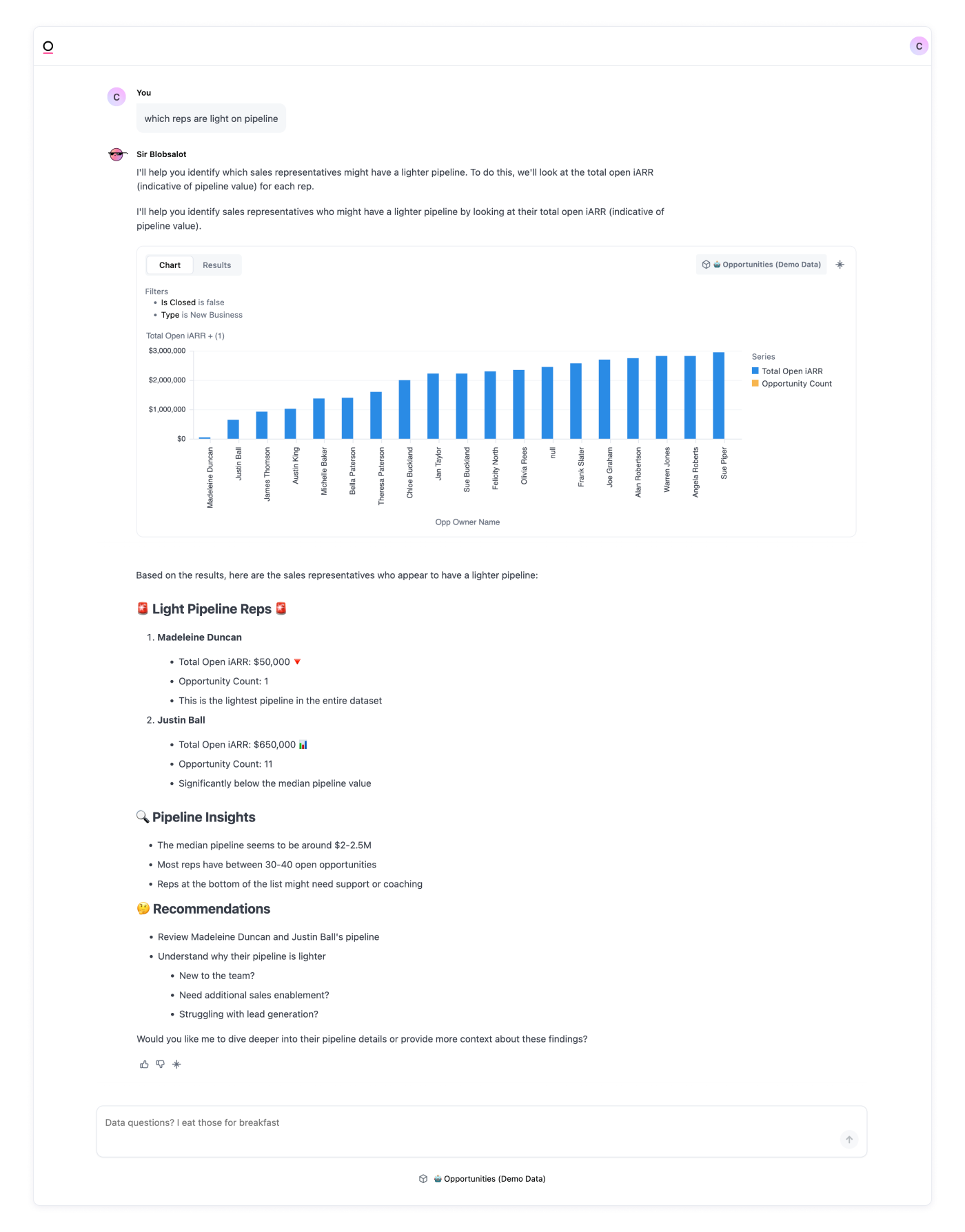

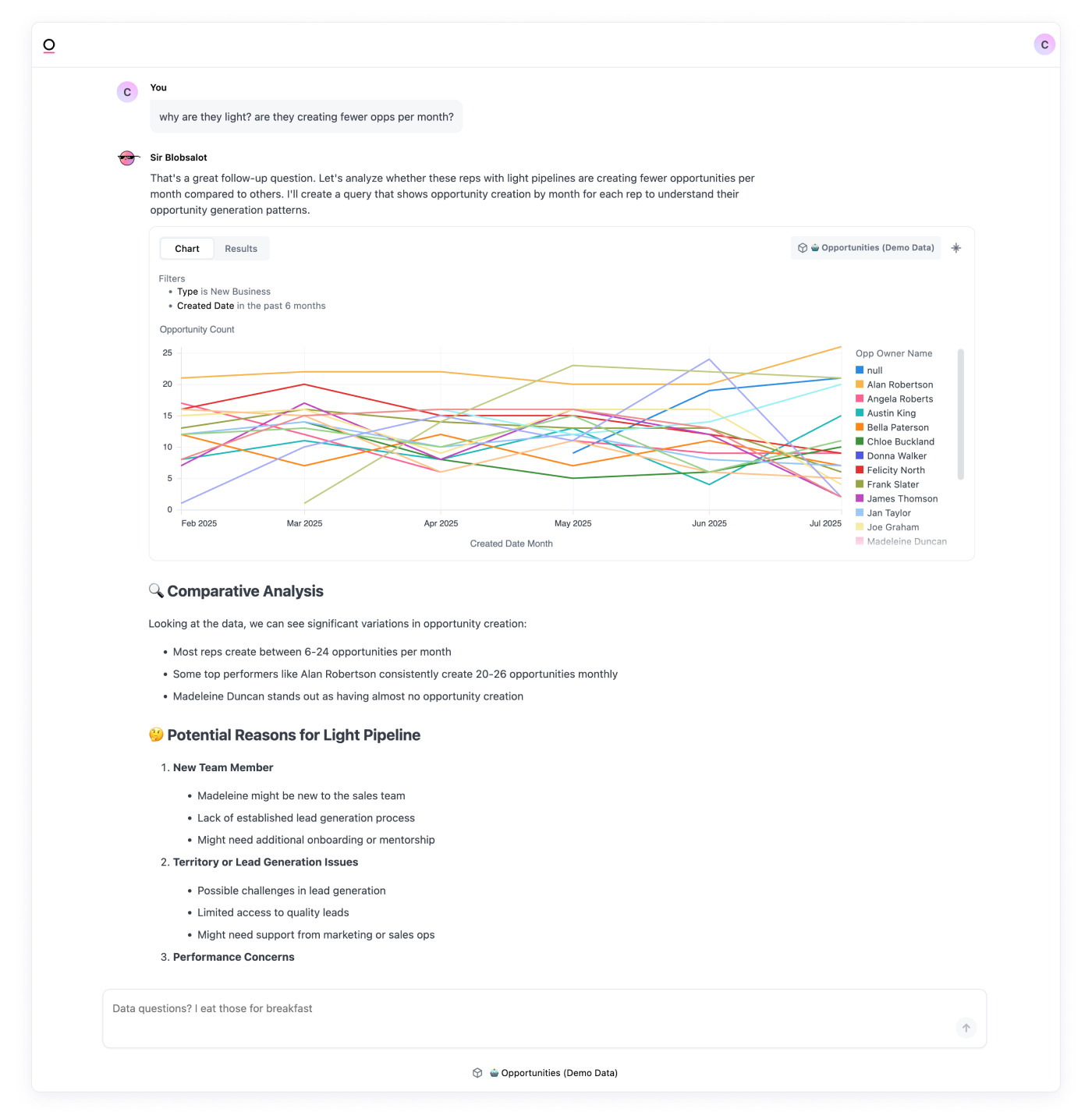

Recommend an action #

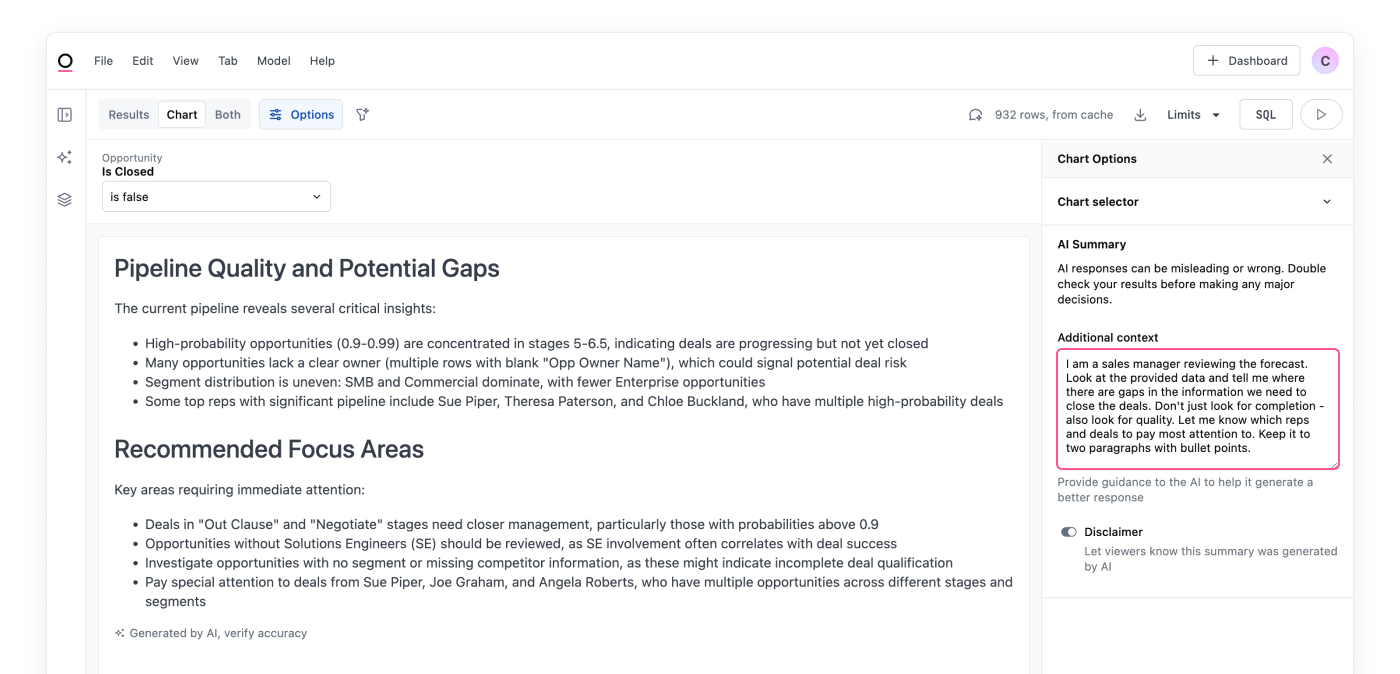

Instead of putting the burden of interpretation on users, AI can give you the bottom line. Optionally, you can instruct AI on specific trends to look for and actions to recommend.

For example, Balthazar Villey, data & performance manager at the software company Oodrive, built an AI summary to help sales leaders spot risks in their pipeline based on a synthesis of several fields. Below is an example we recreated using demo data:

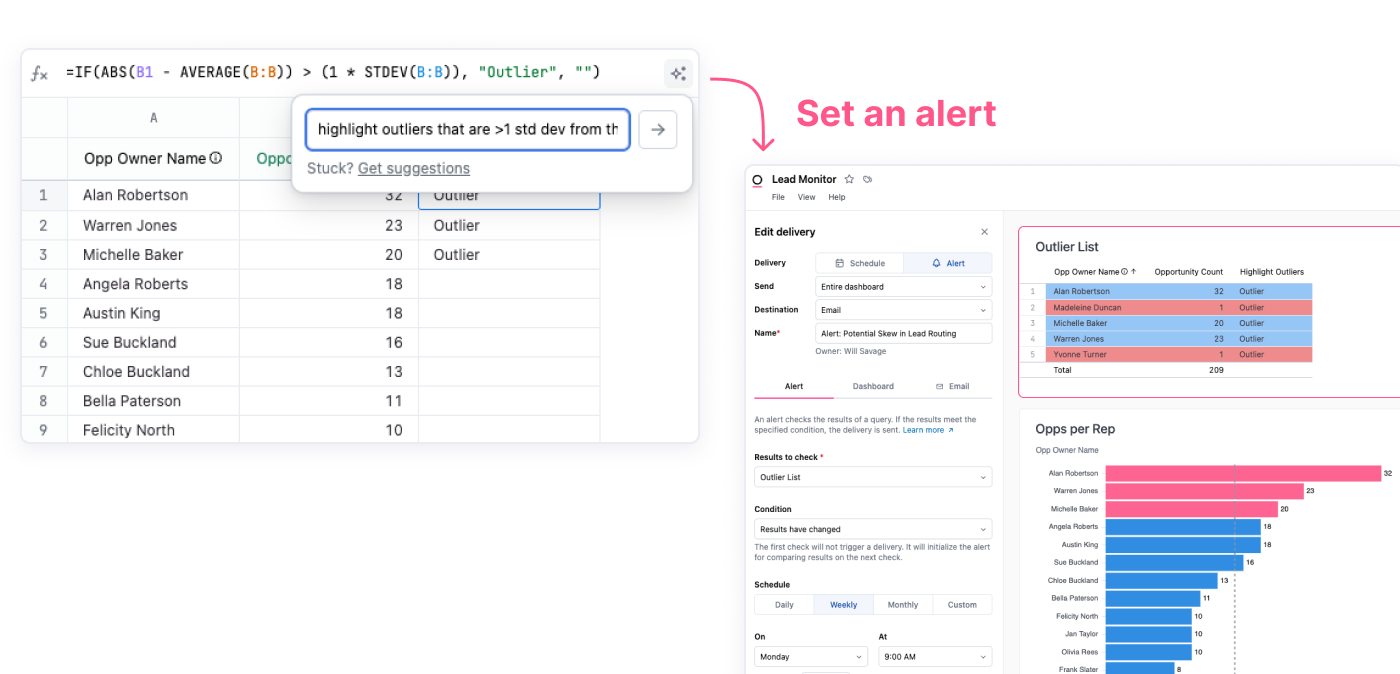

Lock in the gems with alerts and scheduled reports #

AI might help you answer a question or find the unexpected.

One of the most exciting things about AI analytics is how fast it can help you get started. It becomes even more powerful when you can solidify the analyses, reports, and charts it helps you create. Instead of one-time results, they become established charts. Non-deterministic results become deterministic!

If you’ve found something unusual and want to be notified if it happens again, you can use the rest of your BI toolkit to set up alerting and monitoring in your tool of choice:

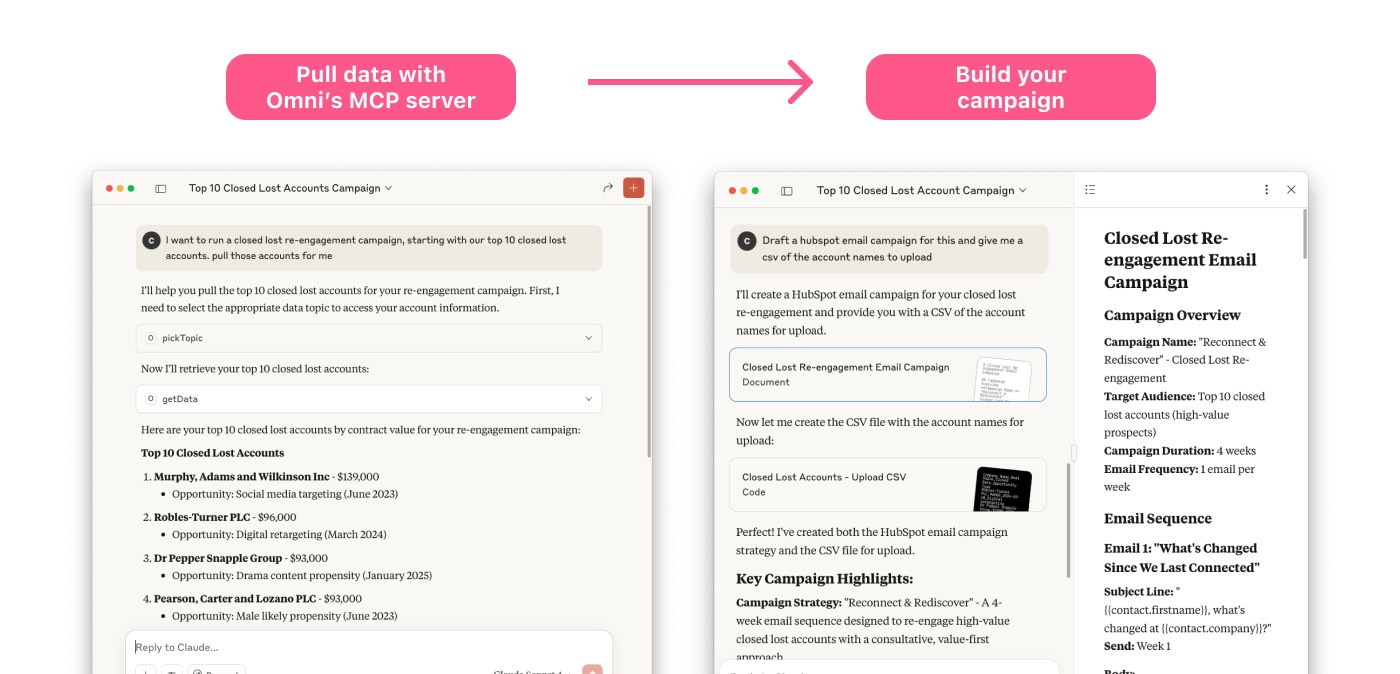
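If you want a feel for what such an alert rule does, here is a minimal sketch under simple assumptions: a list of past weekly values and a fixed threshold in standard deviations. The function name, the threshold of 3, and the demo numbers are all invented, and most BI tools let you configure this without code. The point is that once the rule is written down, it behaves the same way every time.

```python
from statistics import mean, stdev


def is_unusual(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it is more than `threshold` standard deviations from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    spread = stdev(history)
    if spread == 0:
        return latest != history[0]  # flat history: any change is unusual
    return abs(latest - mean(history)) / spread > threshold


weekly_fraud_claims = [12, 15, 11, 14, 13, 12]  # made-up demo values
print(is_unusual(weekly_fraud_claims, 40))  # True
print(is_unusual(weekly_fraud_claims, 14))  # False
```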

Link AI tools together with MCP #

There are probably other AI initiatives or experiments in the business, like internal productivity tools or customer-facing chatbots. You can stitch your AI tools together to create a unified experience, driving adoption and action.

For example, your AI might find users who are a churn risk. Then using an MCP server, it can hand its work to another agent to propose ideas for a re-engagement campaign, and another to build your creative.

We’re still early in the days of multi-AI agent interaction, but the potential is incredible.

#4: Empower your team #

AI can feel magical once you get the hang of it, but you still need to show people the way. As Bobby from Feeld puts it:

“It's also quite important to enable the team from a skills perspective. Not everyone is good at formulating/asking AI questions. Any rollout should go in tandem with some basic people enablement: Simple prompt engineering, and a refresher on the metric/concept landscape of a particular domain.”

Here’s an example of a workshop structure to level up your team:

I. Inspire with real questions. Ask for some questions they’ve tried to answer in the past several weeks, or use your list from part 1, and go through them together.

Make sure to use real business language. It’s okay to use abbreviations, misspellings, and the jargon you’ve codified in your model – talking like a human will make the tooling feel less intimidating.

II. Coach users to ask questions effectively. For more complex questions, AI works better in parts (just like our brains). In your examples, show the start of a question to set the context, then ask the follow-up.

It’s also helpful to coach troubleshooting. If AI goes down the wrong path, it’s okay to refresh and start again. It’s easier to set the context at the start of a chat.

Finally, if your tool allows, show how to take over manual control to audit or directly modify the question – for example, in Omni, you can pop open a workbook to go deeper.

III. Let them try. After you’ve demoed some examples, let the users try a hands-on lab. I recommend giving them at least 2 sample challenges: one that’s a simple question, another which might require hypothesis and follow-up. Have an ‘answer guide’ or ‘day in the life’ set of questions to follow along.

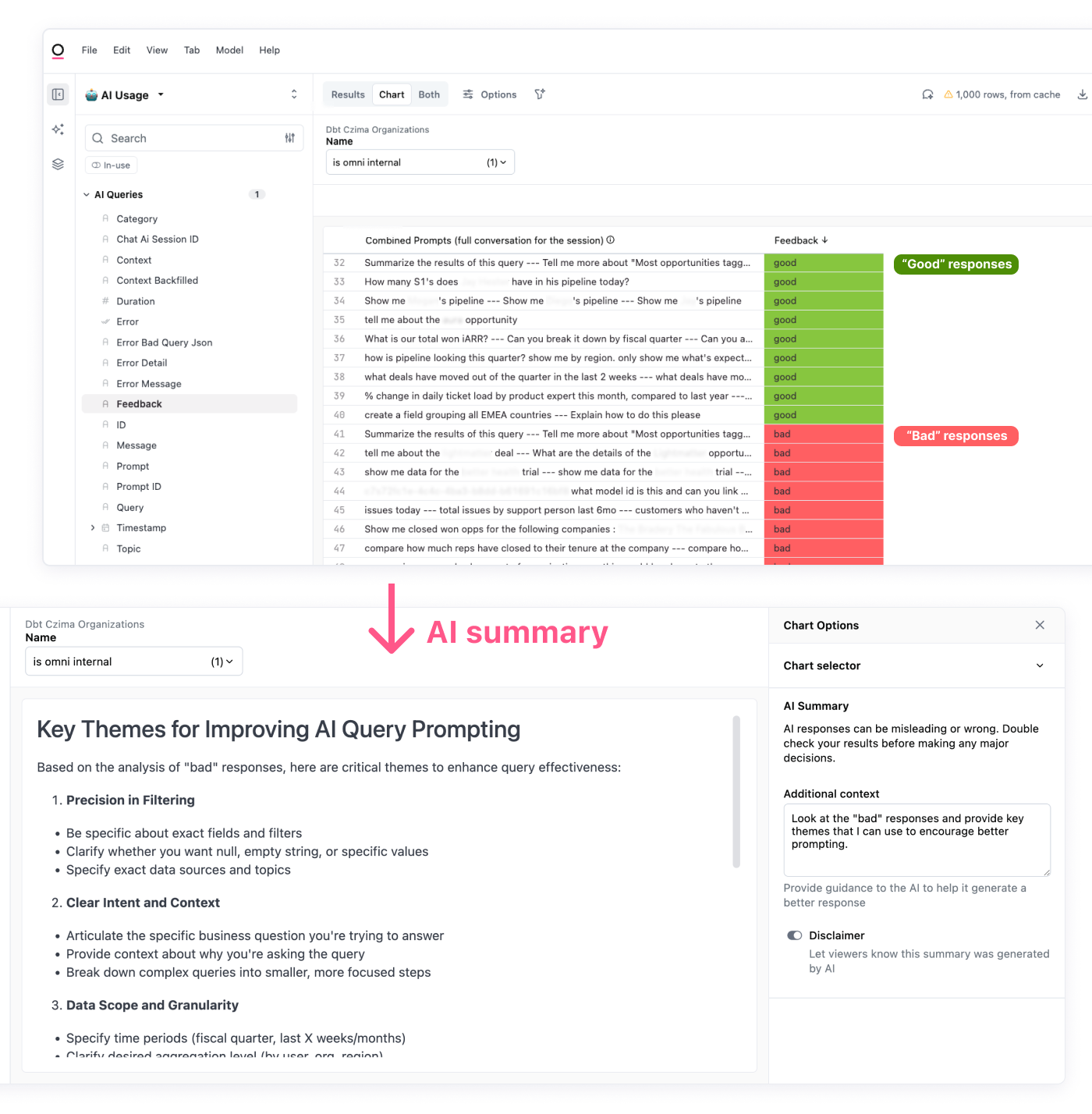

IV. Encourage feedback. A simple thumbs up or down on response quality tells your team where to keep improving, so encourage your team to mark it every time. You might even show your usage + feedback stats so users see how quickly their feedback can make a difference.

When revamping our internal analytics, our CEO Colin sent this message company-wide to remind us how important AI feedback is:

V. Remind people that there’s still work to be done.

Sometimes the most important bit of data is the one that isn’t there. AI can help cut through the noise, but the most important tool is still your brain: it gives you curiosity, hypothesis, and interpretation.

I like to use the classic story of 2 shoe salespeople sent to research a new market, who find that nobody is wearing shoes. The first reports back to HQ: “Situation hopeless, nobody here wears shoes”. The second reports back: “Golden opportunity, they don’t have shoes yet”.

AI might be more like the first salesperson who just reports the facts. Encourage your team to be more like the second salesperson – take the data and figure out what it means in the real world.

#5: Measure and improve #

Encourage + gamify feedback #

Users can be good citizens by rating responses; a simple thumbs up or down is an enormously valuable signal for your team. Consider publishing an AI leaderboard, or giving out “Blobby Badges” and other fun prizes to top users and feedback providers.

Tactical basics, like office hours and Slack channels, can also help users help each other and share wins (and roadblocks). At Omni, we have an #omni-omni-analytics channel specifically for questions about internal data.

Look at usage patterns #

Good analytics tools should give you plenty to measure on the back-end. You should treat this like any other product analytics project, paying particular attention to:

Who has tried using AI? Did you train them or is it organic? It’s great if you have organic spread – make sure you are paying attention to quality and enablement.

How is your stickiness? Low retention is an obvious warning sign: check question logs for unhelpful answers, ask users if they didn’t get what they needed, or share success stories more widely. Likewise, check in with your top users; they might have great ideas on how to reach their peers.

What are the popular domains? Are people asking about the areas you’ve built out, or something else? This can tell you what to build next. AI text summary tools can help you summarize the logs.

Pay extra attention to detractors. It’s easy to lose trust if answers are incorrect. Read every single negative feedback log entry for as long as you can, and reach out to users when you push a fix.
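The checks above can be sketched against a simple question log. The log format here (user, week number, optional thumbs rating) and the `usage_summary` function are assumptions for illustration; your tool's usage data will have its own shape, and the rows are made up.

```python
from collections import defaultdict

# Made-up log rows: (user, week number, feedback), feedback is "up", "down", or None.
LOG = [
    ("ana", 1, "up"), ("ana", 2, None), ("ben", 1, "down"),
    ("cy", 2, "up"), ("ana", 2, "down"), ("ben", 1, None),
]


def usage_summary(log, first_week, next_week):
    """Summarize reach, week-over-week retention, and share of negative ratings."""
    weeks_by_user = defaultdict(set)
    for user, week, _ in log:
        weeks_by_user[user].add(week)

    # Stickiness: of the users active in first_week, who came back in next_week?
    cohort = {u for u, weeks in weeks_by_user.items() if first_week in weeks}
    retained = {u for u in cohort if next_week in weeks_by_user[u]}

    # Detractors: share of rated responses that got a thumbs down.
    rated = [fb for _, _, fb in log if fb is not None]

    return {
        "users_tried": len(weeks_by_user),
        "retention": len(retained) / len(cohort) if cohort else 0.0,
        "negative_share": rated.count("down") / len(rated) if rated else 0.0,
    }


summary = usage_summary(LOG, first_week=1, next_week=2)
print(summary)  # {'users_tried': 3, 'retention': 0.5, 'negative_share': 0.5}
```

Numbers like these only tell you where to look; the thumbs-down entries themselves are still worth reading one by one.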

And yes, you can use AI to help! Tamara Dalhuijsen, AI Transformation Manager at Superside, uses AI to triage her team’s efforts:

“I use Omni AI to build and track AI adoption across the business. Setting up AI adoption departmental metrics used to mean a lot of manual calculations – downloading CSVs from our old tool, uploading them into Excel, running formulas, and then making assumptions. Now I can create those same calculations directly in-app, and I’m always working with real-time data from the actual source of truth.”

Pick up the phone #

When Lloyd Tabb hired me at Looker in 2012, he taught me there’s no substitute for simply talking to your users. Whether you watch in silence as they use your product, or let them ramble about what works and doesn’t, you’ll learn more than any instrumentation can tell you.

“Testing is monitoring without interacting, but software is about getting people to think and behave in new ways. Put down the binoculars. Pick up the phone and call.” - Lloyd Tabb (source)

You’ll see what’s working well, what questions they’re still hesitant to ask, and what they wish the tool could do next. This feedback is also a great way to uncover small blockers that aren’t always obvious in usage data, like unclear naming or filters that aren’t quite intuitive.

A few questions that can jump start the conversation:

What’s been the best experience you’ve had with AI so far? What’s been most frustrating?

Where are you getting stuck?

Do you trust the results? Why or why not?

What would you like it to do next?

Wrapping up #

AI hasn’t changed a data leader’s north star: giving the business clarity and confidence to act. But it has made that goal more realistic than ever. The best data leaders do it by leading with clear and simple action targets, building for trust and accuracy, and helping their users each step of the way.

We’ve seen incredible results amongst our customers making data easier to use than ever. If you’re curious how it might work for you, I’d love to chat (will@omni.co).

Will Savage
